The Robots Aren't Coming for the Testers

This post was originally published on Medium. Go check it out!

ChatGPT is already replacing jobs, and information workers have real reasons to be worried. This week I discuss why I don’t think QA and Testing jobs will be replaced. Changed, certainly, but not replaced.

If you haven’t read my initial thoughts on the impact of Large Language Models on engineering, I suggest you start there since this post builds on those thoughts. My thoughts here are not as fully formed because things are changing rapidly in our industry and I’m learning more daily.

I hope this sparks some new ideas for you and if it does, I’d love to chat about them!

Last week I made three bets

- LLMs will quickly become the normal starting place for development teams starting a new project. Within 2 years engineers won’t think twice about using them, just like Google or StackOverflow now.

- Lead engineers / architects become more valuable to a team but their roles will change. They’ll still have to set the technical strategy but they’ll spend more time reviewing the work of more junior team members to make sure it fits the strategy.

- The ratio of Engineering to QA shifts meaningfully toward QA in the next 18 months; maybe 25–30% more QA. This might mean hiring more specialists, or it might mean your existing team shifts their priorities.

All three bets are different angles of the same theory:

Software is about to be a lot cheaper, easier, and faster to produce in bulk. Without guardrails, it will also be much poorer quality.

Implications for business

If software is cheaper, easier, and faster to build, there are a few implications:

Businesses will solve more problems with software

It will be possible to solve problems that were too expensive or complicated to solve before. Many of these will be small internal efficiency gains but enough small gains add up to huge impacts on the bottom line. This feels similar to the gains made optimizing a lot of little steps in an assembly line.

Some of these will be much bigger problems: ones where a traditional software engineering team is the right fit, but that were too much to tackle with the team at hand. We’ll come back to this below, under "Expectations of traditional tech teams will increase".

Less experienced developers or even non-developers will do a lot of new work outside of traditional tech teams

This has always been happening. I didn’t find a current number but in 2015 Microsoft said 1.1 billion people used their productivity tools including Excel. I bet the Google numbers are similar.

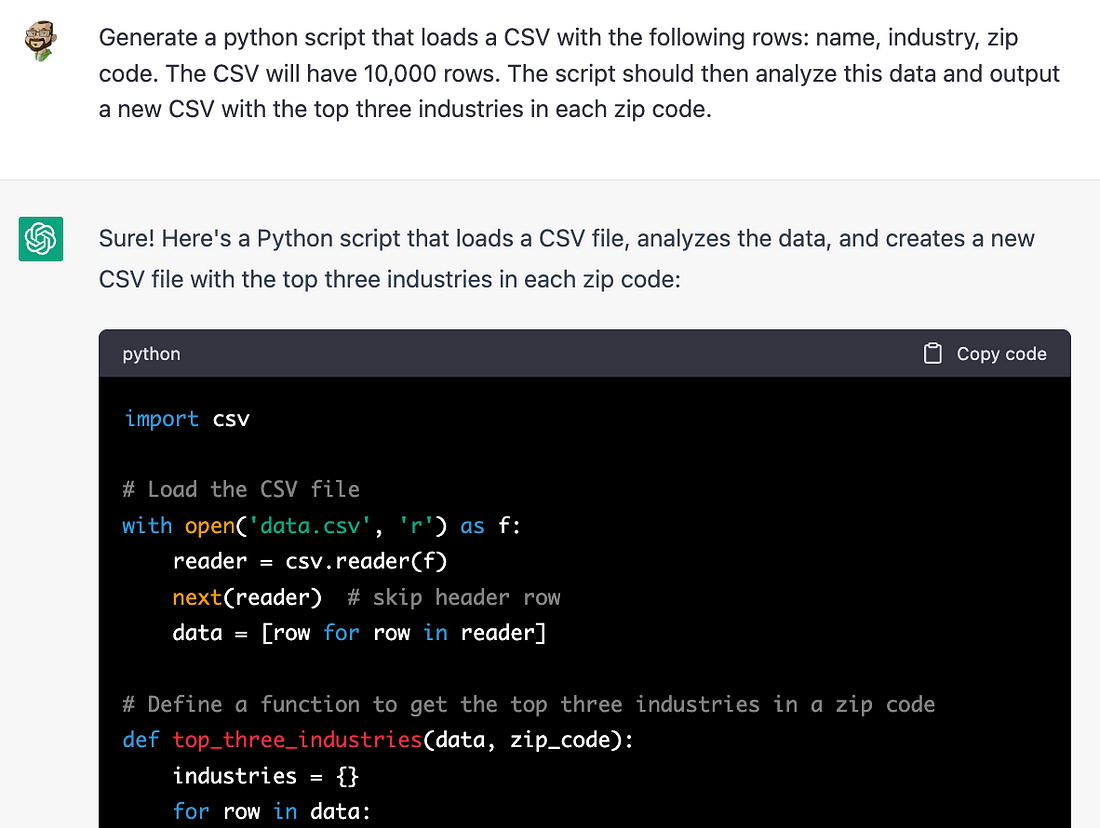

Now imagine a world where someone can ask ChatGPT to generate Python code to analyze data for them.

Actually, don’t imagine it. You can just ask ChatGPT.

(Note: I didn’t run this code, but in similar experiments where I did run the output, it was pretty close to working after a few minor changes.)
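To make this concrete, here is a minimal sketch of the kind of script a business user might get back for a request like "total revenue by region". It is not the output of my ChatGPT session; the sample data, the column names, and the function name `revenue_by_region` are all hypothetical and chosen only for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample data standing in for a spreadsheet export.
SAMPLE_CSV = """region,month,revenue
East,Jan,1200
East,Feb,1500
West,Jan,900
West,Feb,1100
"""


def revenue_by_region(csv_text):
    """Sum the revenue column for each region in the CSV text."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += float(row["revenue"])
    return dict(totals)


if __name__ == "__main__":
    for region, total in sorted(revenue_by_region(SAMPLE_CSV).items()):
        print(f"{region}: {total:.2f}")
```

Notice what the sketch does not do: it has no handling for blank cells, currency symbols, or misspelled region names. That is exactly the kind of gap that matters once scripts like this start feeding real decisions.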

Expectations of traditional tech teams will increase

The ability to ask ChatGPT for code won’t be limited to business users. Engineering teams will do the same thing and integrate this code into public-facing, mission-critical apps where security, performance, and reliability matter most.

Businesses won’t be happy only applying the efficiency gains we talked about above to small internal things. They’ll demand to see their big showcase software accelerate too.

Bet: Once executives see accounting or marketing get huge efficiency gains from using LLMs they’ll demand their highly compensated engineering teams do the same. They’ll be right to do so.

The quality of all of this software is going to be poor

While professional software engineering artisans still produce bugs, we assume some level of inherent quality in the individual components they create. The most nuanced bugs are usually found when each piece of craftwork has to plug into a bigger system.

If the same software engineers are now producing 2x (or more!) as much code, how are they going to maintain any level of quality?

Now take away the artisan we count on for some automatic level of quality and security, and hand the tools to people with other skill sets. Then crank out 5x or 10x as much software. You can probably see where this is going.

What does this mean for Quality Assurance and Testing roles?

I think there are two dimensions where things will change for people in quality-focused roles.

A lot more automated testing is going to be needed

Manual testing doesn’t scale well as teams grow today and it will scale even more poorly with GPT-driven development. Ideally, engineers will wrap their code with unit tests but more code means more regressions across a complicated system.

A lot of teams already use some combination of engineer-written tests and automated testers. Whatever the strategy here, the amount of testing will need to increase. A lot of teams will try to solve this by hiring more testers.

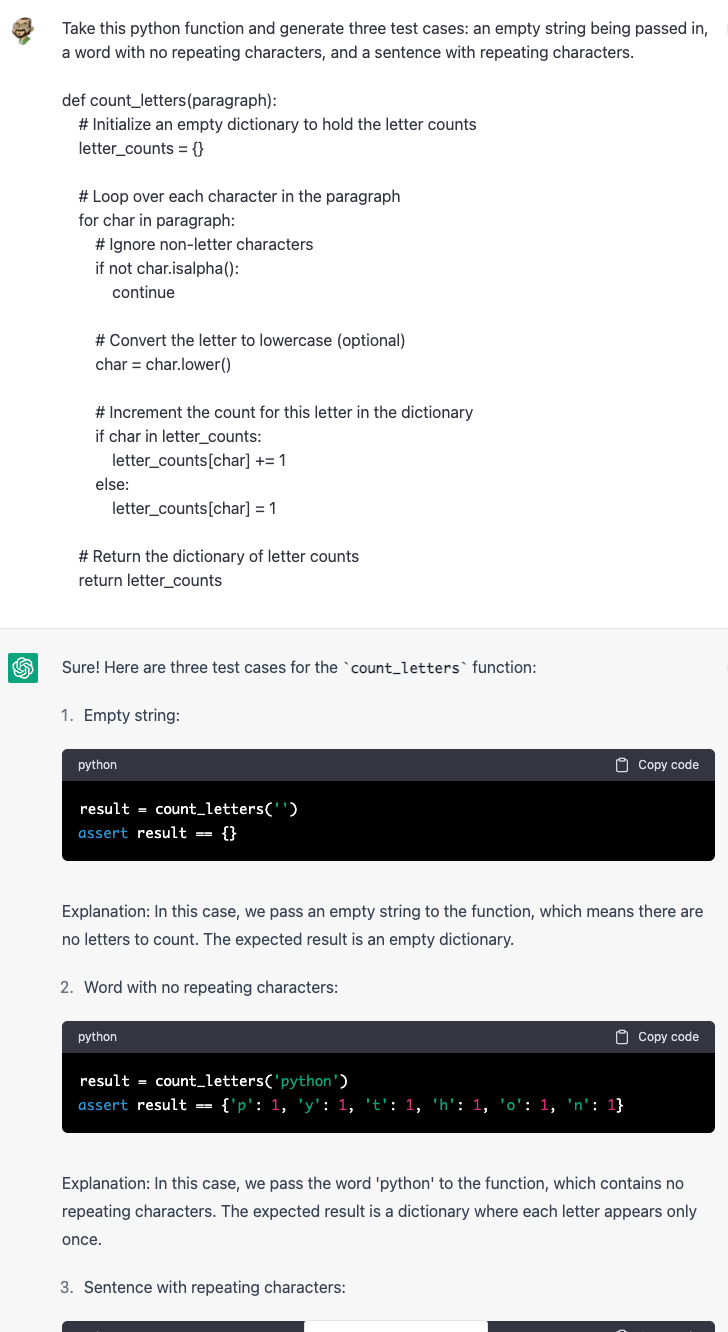

GPT can help with automated tests, so the pool of people who can do test automation just grew. You can ask GPT to write tests as easily as you can ask it to write code. This will be a huge help to anyone approaching testing from a less technical background.
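As an illustration, here is a minimal sketch of what asking for tests might produce. The function `apply_discount` and its rules are hypothetical, invented for this example; the tests use Python’s standard `unittest` module and cover a typical case, the two boundary values, and an invalid input.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(80.0, 100), 0.0)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)
```

Saved to a file, these run with `python -m unittest`. Someone still has to decide whether these are the right cases to test, which is where testing experience continues to matter.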

A Quality Strategy will be critical

It will be difficult to test everything, all of the time. In particular, it will be impossible to test the code being generated inside of business departments and outside of standard development processes.

Quality and Engineering leadership will need a defined, published, and enforced architectural and quality strategy that everyone buys into. This strategy needs to describe how systems integrate, how software boundaries (where testing can easily occur) are defined, and what can and can’t integrate with critical systems.

Bet: Quality Assurance teams in software become more like QA on an assembly line.

They might not test everything but they will sample systems as they evolve and compare them to the strategy. When issues are found, they’ll work to improve processes to prevent that issue from recurring.

I think these quality experts will have to partner with power users in the business to try to instill the same quality principles that are mandated in engineering. They will definitely need to police the boundaries to make sure nothing that doesn’t follow quality practices flows into mission-critical systems.

For mission critical software, more QA people will be needed

For internal software this might not be the case, but for customer facing software (particularly with any sensitive data or in regulated industries) more QA people will be needed.

Software complexity and risk don’t scale linearly with lines of code. More code produces more interactions between units of code, so the surface area for defects grows much faster than the line count.
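A rough back-of-the-envelope way to see this, assuming as a simplification that any two units of code can interact: the number of possible pairwise interactions among n units is n(n - 1)/2. The helper name `pairwise_interactions` below is just for illustration, and real systems are messier, since interactions can involve more than two units at a time.

```python
def pairwise_interactions(units):
    """Number of distinct pairs among `units` components: n * (n - 1) / 2."""
    return units * (units - 1) // 2


for units in (10, 20, 100):
    print(units, pairwise_interactions(units))
# 10 units  -> 45 possible pairs
# 20 units  -> 190 possible pairs
# 100 units -> 4950 possible pairs
```

Doubling the number of units from 10 to 20 roughly quadruples the number of places where two pieces can disagree with each other, and that is before counting interactions among three or more units.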

Bet: Software teams will see more and more costly defects when they start using LLMs to accelerate their work. Many teams will respond by hiring more testers.

I don’t have a fully formed view of how this will work but I think we’ll also see a need for expanded and different quality teams on SaaS products and in regulated enterprises like healthcare or finance. These expanded quality teams will combine traditional testing with security and compliance awareness.

Bet: In the next couple of years we’ll hear of an entirely new role inside of software teams that’s the evolution of Quality Assurance.

I don’t know what this is, but I hope it’s not another terrible evolution of the AbbreviateEverythingOps naming convention like DevSecTestOps.

What’s next?

I expect to come back to the topic of how GPT and other LLMs affect quality in the future when I’ve had more time to reflect.

Next week, however, I want to dive deeper into the business impacts of this tech. I’ll touch on how I think executives should be thinking about how LLMs will eventually impact their businesses and what they should be doing right now to prepare.