What ChatGPT Means for Engineers Today

This post was originally published on Medium.

ChatGPT is an amazing new tool that promises to help engineering teams do everything faster. But like using a microwave to make Thanksgiving dinner, faster is no guarantee of better.

What is ChatGPT really?

Large Language Models (LLMs) like GPT are powerful text generation tools; you can think of them as really advanced autocomplete. They’ve seen millions of patterns, and because most of the work a team does isn’t completely revolutionary, they can usually find one that matches.

ChatGPT is a chatbot layered on top of GPT. It’s a human user experience for a complex piece of technology.

There are already purpose-built tools like GitHub’s Copilot (which is built on OpenAI’s GPT technology) that focus on matching the code they were trained on against what engineers are writing. ChatGPT is a more general text completion tool, a “stochastic parrot”, but it does its job well. Specialty tools like Copilot do similar things but are embedded closer to the developer experience. I expect to see many competing products in 2023.

None of these LLMs is a brilliant new general AI. They won’t solve your business problems or innovate something completely new. None of them is autocomplete for your business strategy.

The hyperbole today is that LLMs are “changing everything”. I think this is true, but not in the “telegraph ended the Pony Express in 18 months” sense. Engineering isn’t going away.

It changes everything in the way Google did when it made code on the internet findable, which almost instantly made every developer twice as effective. It’s another step in how engineers gain knowledge, an evolution that went from huge, expensive books about programming languages to the internet.

What does this imply about engineering speed?

Let’s take a simplified model of software engineering and say there are two basic modes of work:

- Mode 1 — Tactical delivery: deciding on how to implement features, figuring out how to use libraries and 3rd party tools, deciphering bugs, and actually writing code.

- Mode 2 — Strategic decision making: identifying product features that need to be built, technical investments that need to be made, and architecture and software patterns that need to evolve.

Mode 1 — Tactical Delivery

In Mode 1, LLMs are useful for generating boilerplate code (i.e. structural code that’s necessary but doesn’t have business value) and for exploring technology or patterns developers aren’t familiar with.

Most of the examples people are talking about are boilerplate, but that isn’t where the real value is.

The real value in Mode 1 is giving engineers a head start on complicated technical tasks. On complex work, engineers can spend hours or even days exploring a concept before they get close to writing the first line of useful code. LLMs can short-circuit that.

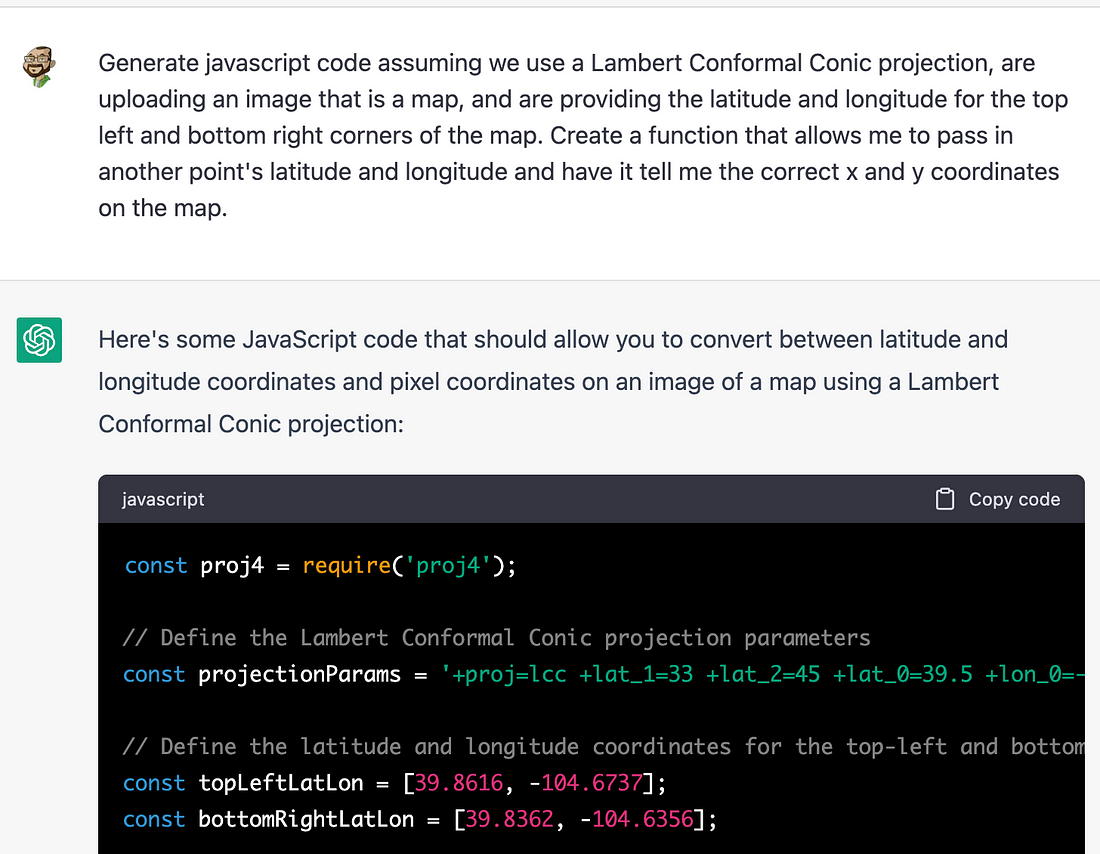

Here’s an example of me exploring code to work with maps and plotting. I’d never worked with projections (i.e. how we flatten the roundish Earth into flat images) and had no idea where to start. ChatGPT gave me a starting point that did a few things:

- Told me about a 3rd party library “proj4” that I’d never heard of

- Gave me a starting point for arcane configuration variables I’d have to figure out

- Gave me actual code I could then start to modify
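The screenshot doesn’t say which projection the generated code used, so purely as an illustration of what a projection does, here is a minimal hand-written sketch of spherical Web Mercator (EPSG:3857), the projection most web map tiles use. It’s in TypeScript because proj4 is a JavaScript library. The function names and the choice of projection are assumptions for this example, not what ChatGPT produced; in proj4 itself the same conversion is a single call along the lines of `proj4("EPSG:4326", "EPSG:3857", [lon, lat])`.

```typescript
// Minimal sketch of spherical Web Mercator (EPSG:3857), written by hand for
// illustration. proj4 implements this and many other projections properly.
const EARTH_RADIUS_M = 6378137; // WGS84 semi-major axis, treated as a sphere's radius
const DEG_TO_RAD = Math.PI / 180;

// Longitude/latitude in degrees -> x/y in meters on the flat map.
function toWebMercator(lonDeg: number, latDeg: number): [number, number] {
  const x = EARTH_RADIUS_M * lonDeg * DEG_TO_RAD; // longitude maps linearly to x
  // Latitude is stretched logarithmically as it approaches the poles.
  const y = EARTH_RADIUS_M * Math.log(Math.tan(Math.PI / 4 + (latDeg * DEG_TO_RAD) / 2));
  return [x, y];
}

// Inverse: x/y in meters -> longitude/latitude in degrees.
function fromWebMercator(x: number, y: number): [number, number] {
  const lonDeg = x / EARTH_RADIUS_M / DEG_TO_RAD;
  const latDeg = (2 * Math.atan(Math.exp(y / EARTH_RADIUS_M)) - Math.PI / 2) / DEG_TO_RAD;
  return [lonDeg, latDeg];
}

// Example: project an arbitrary point in New York City, then convert it back.
const [nycX, nycY] = toWebMercator(-73.9857, 40.7484);
console.log(nycX, nycY);
console.log(fromWebMercator(nycX, nycY));
```

Longitude maps to x in a straight line, but latitude goes through a logarithm, which is why the poles (±90°) land at infinity and web maps conventionally clip Web Mercator at roughly ±85.05°. Details like that are exactly the “arcane configuration” a library such as proj4 hides behind its parameters.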

ChatGPT is surprisingly good at telling you why it did something. You can ask it why it used a library, or where a bug is likely to happen. I’ve found that for routine tasks it does a good job of explaining why and not just what, if you ask.

My bet — LLMs will quickly become the normal starting point for development teams beginning a new project. Within 18 months, engineers won’t think twice about using them, just as they don’t think twice about Google or Stack Overflow now.

Mode 2 — Strategy

Mode 2 is harder to predict and a little different. GPT is already pretty good at laying out how you’d solve a specific problem, but it’s not great at suggesting the decisions that will set you up for success 6, 12, or 18 months from now.

This kind of information is probably not heavily represented in the training data because most companies don’t publish their strategy. It’s also highly specific to each team.

My bet — Lead engineers and architects will become more valuable to their teams, but their roles will change. They’ll still have to set the technical strategy, but they’ll spend more time reviewing the work of more junior team members to make sure it fits that strategy.

It’s unclear to me whether this means teams will need more senior people and become barbell-shaped (lots of seniors, few mids, lots of juniors) or stay pyramid-shaped.

So what about quality?

Quality is going to take a hit, and post-development testing is going to be even more important than it already is.

Why? None of the code ChatGPT gives you went through the normal learning cycle of engineering:

- Engineer has an idea and writes code; that code fails

- Engineer learns from the failure and modifies the code, learning more about how it works along the way (repeat many times)

- Engineer has deep knowledge about how the code works and where likely failure points still are

- Code is ready for explicit testing

None of it is test-driven either, where the rules for how the code should (and shouldn’t) work are defined ahead of time. As a result, engineers may not fully understand the inner workings of their code. In my example above, I have zero idea what the “projectionParameters” really mean. If they work, I probably won’t go figure it out either.
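To make the contrast concrete, here is a minimal test-first sketch in TypeScript. It assumes a hand-rolled Web Mercator function rather than the proj4 code from the screenshot, and `projectChecked`, the rule list, and the ±85.05° latitude cutoff are illustrative choices. The point is the order: the rules, including what the function should refuse to do, are written down before the implementation. A real project would use a test framework instead of a plain loop.

```typescript
// Test-first sketch: the rules come first, the implementation second.
type Rule = { lon: number; lat: number; shouldThrow: boolean; why: string };

// Web Mercator is conventionally clipped at about +/-85.0511 degrees latitude.
const MAX_LAT_DEG = 85.05112878;

// 1. Rules for how the code should (and shouldn't) work, decided up front.
const rules: Rule[] = [
  { lon: 0, lat: 0, shouldThrow: false, why: "origin is valid" },
  { lon: 180, lat: 0, shouldThrow: false, why: "antimeridian is in range" },
  { lon: 0, lat: 90, shouldThrow: true, why: "the pole projects to infinity" },
  { lon: 200, lat: 0, shouldThrow: true, why: "longitude out of range" },
  { lon: Number.NaN, lat: 0, shouldThrow: true, why: "not a number" },
];

// 2. The implementation, written to satisfy the rules above.
function projectChecked(lonDeg: number, latDeg: number): [number, number] {
  if (!Number.isFinite(lonDeg) || Math.abs(lonDeg) > 180) {
    throw new RangeError(`longitude out of range: ${lonDeg}`);
  }
  if (!Number.isFinite(latDeg) || Math.abs(latDeg) > MAX_LAT_DEG) {
    throw new RangeError(`latitude out of range: ${latDeg}`);
  }
  const R = 6378137; // sphere radius in meters
  const rad = Math.PI / 180;
  return [R * lonDeg * rad, R * Math.log(Math.tan(Math.PI / 4 + (latDeg * rad) / 2))];
}

// 3. Check every rule; return the reason for each one that fails.
function checkRules(): string[] {
  const failures: string[] = [];
  for (const rule of rules) {
    let threw = false;
    try {
      projectChecked(rule.lon, rule.lat);
    } catch {
      threw = true;
    }
    if (threw !== rule.shouldThrow) failures.push(rule.why);
  }
  return failures;
}

console.log(checkRules()); // prints []
```

The pole rule is the kind of thing an engineer usually learns by watching code fail, which is exactly the step generated code skips.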

For internal projects, particularly small one-off projects that serve a specific business function, this probably doesn’t matter at all. Delivering these projects just got much faster and cheaper.

For external projects and anywhere security, data integrity, and reliability are key, the need for QA just expanded. This code needs to be treated as untrustworthy until it’s heavily exercised by people trying to break it.

My bet — The ratio of engineering to QA shifts meaningfully toward QA in the next 18 months, perhaps 25–30% more QA. This might mean hiring more specialists, or it might mean your existing team shifts its priorities.

What’s next

Next week I’ll write more about how LLMs will empower QA professionals and anyone testing code. In particular, they will make automated testing cheaper and faster.

After that, I’ll write about how business leaders should think about balancing speed and risk. I’ll also talk about how you can start future-proofing your team to use tools like Copilot and ChatGPT.